- DEVELOPMENT PROJECTS

Project Directors: Maribeth Gandy Coleman, Ph.D. (GT) and Bruce N. Walker, Ph.D. (GT)

The Wirelessly Connected Devices project will investigate cross-cutting themes of interfaces, interconnectivity, and interactivity for next-generation wirelessly connected devices. Specifically, the project will develop wearable device solutions and advanced auditory device solutions for people with disabilities and others who can benefit most, such as those with sensory, dexterity, or cognitive challenges.

Task 1: Wearable Devices and Connectivity

Lead is Georgia Tech, Maribeth Gandy Coleman, Ph.D.

Wirelessly connected, wearable computing may offer people with disabilities tools to further employment, community participation, and independent living outcomes, whether through contextually aware, “just-in-time” information, or support for tasks such as use of public transportation or cueing of work tasks. Wearable systems will need to adapt to the user and the constantly changing contexts of use, via personalization and reconfiguration options that can adjust to user abilities and preferences, changing environmental conditions, tasks, and social settings. However, current-market wearables largely remain constrained by passive sensing, requirements for literal on-body interaction, and reliance upon small screens or visual displays, all of which characterize the fitness trackers that dominate the market for wearables. Currently available devices offer few, if any, input and output (I/O) options beyond watches with small visual displays (and simple tactile output) and touch input, and there are just a few head-mounted display options. Wearable I/O solutions must use a diversity of output modalities, body placements, and interaction methods to realize their potential benefit for people with disabilities.

This project will build upon the social and cultural design research findings. It will create new wearable I/O devices (display and/or user-interface) that are accessible and contextually useful. These could include wearable input devices based on gesture, eye movement, haptic signals, or responses to the body’s electrical signals. After prototype development, the project team will engage in activities to determine the appropriateness of two tested and refined wearable devices in public Internet of Things (IoT) settings and Whole Community environments. The focus will not be on particular “devices” but rather on the types of wearable “services” that support what users with disabilities will require or desire.

Task 2: Advanced Auditory Assistive Devices

Lead is Georgia Tech, Bruce N. Walker, Ph.D.

Wireless technologies’ increasing reliance upon visual interfaces may undermine usability and usefulness in mobile contexts. Furthermore, dependence on both eyes and hands for effective, full use renders wireless devices inaccessible to some users with disabilities. For blind or low vision users, auditory-based, eyes-free, hands-free interactions may offer improvements in accessibility while enhancing usability and safety for them and other users more broadly. Although wireless technologies increasingly offer auditory features, their design has failed to keep pace with advances in visual displays and features: they are slow and inefficient to use, and they do not realize the full potential of audio on modern devices. There is a need to develop enhanced and optimized auditory interfaces (including, but not limited to, next-generation auditory menus and speech) with efficient hands-free, eyes-free control, with accompanying adoption of standards throughout the industry. Leveraging the user’s viewpoint, which includes location, environment, preferences, goals, and companions, can increase the value and impact of any interface, including auditory interfaces as part of frequently used assistive technologies.

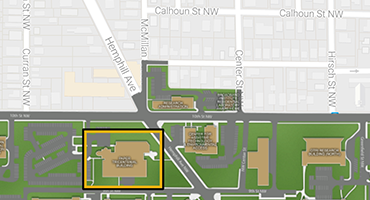

This project will develop market-ready interfaces that include auditory menus with entire sets of commands and features that can be clustered into an auditory-based operating system (OS) and incorporated into, and interact with, a range of wireless hardware and devices. The eyes-free, hands-free nature of this activity is expected to benefit blind and low vision users, for whom visual displays are frequently inaccessible, as well as people with cognitive disabilities and people with dexterity limitations. Development will investigate next-generation auditory interfaces, gesture enhancements to audio interfaces, and deep-learning enhancements to audio interfaces, which will advance technological discoveries developed as part of prior proof-of-concept work. Social acceptance and the pervasive use of auditory display technologies also will be explored as part of development activities. A foundation for SWAN 2.0, the next iteration of the System for Wearable Audio Navigation (SWAN), will be designed and developed. It will build upon existing guidance and navigation functions with additional wayfinding capabilities that utilize new technologies, resulting in a finished prototype that may be brought to market.